Multi-region zone sharding

2022-09-05

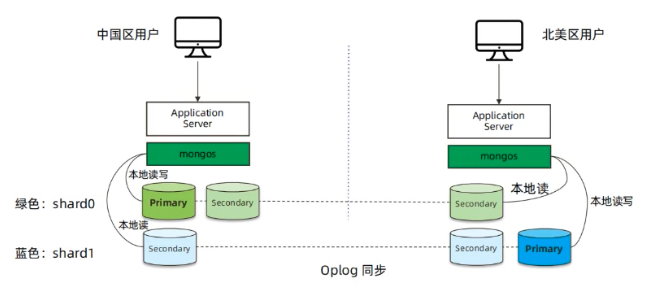

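Suitable for multinational companies and for deployments that accept writes in several regions at once.

Combined with DNS, it lets clients in each region read and write locally.

Implementation steps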

```bash
# db01 (172.16.40.143)
su - mongod
mkdir -p /mongodb/{20001..20003}/{data,log}

cat > /mongodb/20001/mongod.conf <<EOF
systemLog:
  destination: file
  logAppend: true
  path: /mongodb/20001/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /mongodb/20001/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 20001
  bindIp: 0.0.0.0
replication:
  oplogSizeMB: 2048
  replSetName: CN_sh
sharding:
  clusterRole: shardsvr
EOF

\cp /mongodb/20001/mongod.conf /mongodb/20002/
\cp /mongodb/20001/mongod.conf /mongodb/20003/
sed 's#20001#20002#g' /mongodb/20002/mongod.conf -i
sed 's#20001#20003#g' /mongodb/20003/mongod.conf -i

# Note: 20003 is a secondary of the OTHER region's replica set,
# so its replSetName must be changed from CN_sh to US_sh.
sed 's#replSetName: CN_sh#replSetName: US_sh#' /mongodb/20003/mongod.conf -i

mongod -f /mongodb/20001/mongod.conf
mongod -f /mongodb/20002/mongod.conf
mongod -f /mongodb/20003/mongod.conf
```
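Copying one file and patching it with `sed` is easy to get wrong. The replSetName change for 20003 is exactly the kind of edit that gets forgotten. The sketch below renders each instance's config from parameters instead. `renderMongodConf` is a hypothetical helper, not part of MongoDB. It reproduces the settings used above.

```javascript
// Hypothetical helper (not a MongoDB API): renders the mongod.conf used above
// for one instance, so port, paths and replSetName are set explicitly
// instead of being patched with sed.
function renderMongodConf({ port, replSetName, clusterRole }) {
  const base = `/mongodb/${port}`;
  return [
    "systemLog:",
    "  destination: file",
    "  logAppend: true",
    `  path: ${base}/log/mongodb.log`,
    "storage:",
    "  journal:",
    "    enabled: true",
    `  dbPath: ${base}/data`,
    "  directoryPerDB: true",
    "  wiredTiger:",
    "    engineConfig:",
    "      cacheSizeGB: 1",
    "      directoryForIndexes: true",
    "    collectionConfig:",
    "      blockCompressor: zlib",
    "    indexConfig:",
    "      prefixCompression: true",
    "processManagement:",
    "  fork: true",
    "  timeZoneInfo: /usr/share/zoneinfo",
    "net:",
    `  port: ${port}`,
    "  bindIp: 0.0.0.0",
    "replication:",
    "  oplogSizeMB: 2048",
    `  replSetName: ${replSetName}`,
    "sharding:",
    `  clusterRole: ${clusterRole}`,
    "",
  ].join("\n");
}

// db01 layout from the steps above: 20003 belongs to the other region's set.
const db01Instances = [
  { port: 20001, replSetName: "CN_sh", clusterRole: "shardsvr" },
  { port: 20002, replSetName: "CN_sh", clusterRole: "shardsvr" },
  { port: 20003, replSetName: "US_sh", clusterRole: "shardsvr" },
];

for (const inst of db01Instances) {
  console.log(`--- /mongodb/${inst.port}/mongod.conf`);
  console.log(renderMongodConf(inst));
}
```

Writing each string to its file (for example with Node's `fs.writeFileSync`) would replace the `cp`/`sed` steps. Passing `replSetName: "config"` and `clusterRole: "configsvr"` would cover the config server instances as well.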

```bash
# db02 (172.16.40.144)
su - mongod
mkdir -p /mongodb/{20001..20003}/{data,log}

cat > /mongodb/20001/mongod.conf <<EOF
systemLog:
  destination: file
  logAppend: true
  path: /mongodb/20001/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /mongodb/20001/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 20001
  bindIp: 0.0.0.0
replication:
  oplogSizeMB: 2048
  replSetName: US_sh
sharding:
  clusterRole: shardsvr
EOF

\cp /mongodb/20001/mongod.conf /mongodb/20002/
\cp /mongodb/20001/mongod.conf /mongodb/20003/
sed 's#20001#20002#g' /mongodb/20002/mongod.conf -i
sed 's#20001#20003#g' /mongodb/20003/mongod.conf -i

# Note: 20003 is a secondary of the OTHER region's replica set,
# so its replSetName must be changed from US_sh to CN_sh.
sed 's#replSetName: US_sh#replSetName: CN_sh#' /mongodb/20003/mongod.conf -i

mongod -f /mongodb/20001/mongod.conf
mongod -f /mongodb/20002/mongod.conf
mongod -f /mongodb/20003/mongod.conf
```
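With this cross placement, each host also holds one copy of the other region's data. The trade-off follows from the replica-set majority rule: a primary needs floor(n/2) + 1 reachable voting members. The sketch below assumes all three members vote with equal weight, which is the default. The membership lists are taken from the `rs.initiate` configs in the next step.

```javascript
// Replica-set membership as laid out on db01 (172.16.40.143) and db02 (172.16.40.144).
const replSets = {
  CN_sh: ["172.16.40.143:20001", "172.16.40.143:20002", "172.16.40.144:20003"],
  US_sh: ["172.16.40.144:20001", "172.16.40.144:20002", "172.16.40.143:20003"],
};

// A primary can be elected only while a strict majority of voting members is up.
function majority(n) {
  return Math.floor(n / 2) + 1;
}

// Assumes every member votes with equal weight (the replica-set default).
function canElectPrimary(members, downHost) {
  const alive = members.filter((m) => !m.startsWith(downHost + ":")).length;
  return alive >= majority(members.length);
}

for (const host of ["172.16.40.143", "172.16.40.144"]) {
  for (const [name, members] of Object.entries(replSets)) {
    console.log(`${host} down -> ${name} can elect primary: ${canElectPrimary(members, host)}`);
  }
}
```

Losing a host keeps the other region writable. The region whose two members lived on the failed host cannot elect a primary, so it stops accepting writes until that host returns.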

```bash
# config server (172.16.40.145)
su - mongod
mkdir -p /mongodb/{20004..20006}/{data,log}

cat > /mongodb/20004/mongod.conf <<EOF
systemLog:
  destination: file
  logAppend: true
  path: /mongodb/20004/log/mongodb.log
storage:
  journal:
    enabled: true
  dbPath: /mongodb/20004/data
  directoryPerDB: true
  #engine: wiredTiger
  wiredTiger:
    engineConfig:
      cacheSizeGB: 1
      directoryForIndexes: true
    collectionConfig:
      blockCompressor: zlib
    indexConfig:
      prefixCompression: true
processManagement:
  fork: true
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 20004
  bindIp: 0.0.0.0
replication:
  oplogSizeMB: 2048
  replSetName: config
sharding:
  clusterRole: configsvr
EOF

\cp /mongodb/20004/mongod.conf /mongodb/20005/
\cp /mongodb/20004/mongod.conf /mongodb/20006/
sed 's#20004#20005#g' /mongodb/20005/mongod.conf -i
sed 's#20004#20006#g' /mongodb/20006/mongod.conf -i

mongod -f /mongodb/20004/mongod.conf
mongod -f /mongodb/20005/mongod.conf
mongod -f /mongodb/20006/mongod.conf
```

```
# Replica set configuration

# On db01 (172.16.40.143): initiate CN_sh
mongo --port 20001
use admin
config = {_id: "CN_sh", members: [
    {_id: 0, host: '172.16.40.143:20001'},
    {_id: 1, host: '172.16.40.143:20002'},
    {_id: 2, host: '172.16.40.144:20003'}
]}
rs.initiate(config)

# On db02 (172.16.40.144): initiate US_sh
mongo --port 20001
use admin
config = {_id: "US_sh", members: [
    {_id: 0, host: '172.16.40.144:20001'},
    {_id: 1, host: '172.16.40.144:20002'},
    {_id: 2, host: '172.16.40.143:20003'}
]}
rs.initiate(config)

# On the config server host (172.16.40.145): initiate the config replica set
mongo --port 20004
use admin
config = {_id: "config", members: [
    {_id: 0, host: '172.16.40.145:20004'},
    {_id: 1, host: '172.16.40.145:20005'},
    {_id: 2, host: '172.16.40.145:20006'}
]}
rs.initiate(config)
```
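The three `config` documents above differ only in the set name and the member list. `buildReplSetConfig` below is a hypothetical helper that generates them and numbers the members in order. It uses only plain JavaScript, so pasting the function into the mongo shell and calling `rs.initiate(buildReplSetConfig(...))` should also work, though that usage is untested here.

```javascript
// Hypothetical helper: builds the document passed to rs.initiate() from a
// replica-set name and an ordered list of "host:port" members.
function buildReplSetConfig(name, hosts) {
  return {
    _id: name,
    members: hosts.map((host, i) => ({ _id: i, host })),
  };
}

const configs = [
  buildReplSetConfig("CN_sh", ["172.16.40.143:20001", "172.16.40.143:20002", "172.16.40.144:20003"]),
  buildReplSetConfig("US_sh", ["172.16.40.144:20001", "172.16.40.144:20002", "172.16.40.143:20003"]),
  buildReplSetConfig("config", ["172.16.40.145:20004", "172.16.40.145:20005", "172.16.40.145:20006"]),
];

for (const cfg of configs) {
  console.log(JSON.stringify(cfg));
}
```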

```bash
# mongos configuration
mkdir -p /mongodb/20010/{data,log}

cat > /mongodb/20010/mongod.conf <<EOF
systemLog:
  destination: file
  logAppend: true
  path: /mongodb/20010/log/mongodb.log
processManagement:
  fork: true
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 20010
  bindIp: 0.0.0.0
sharding:
  configDB: config/172.16.40.145:20004,172.16.40.145:20005,172.16.40.145:20006
EOF

mongos -f /mongodb/20010/mongod.conf

# Second mongos (port 20011)
mkdir -p /mongodb/20011/{data,log}

cat > /mongodb/20011/mongod.conf <<EOF
systemLog:
  destination: file
  logAppend: true
  path: /mongodb/20011/log/mongodb.log
processManagement:
  fork: true
  timeZoneInfo: /usr/share/zoneinfo
net:
  port: 20011
  bindIp: 0.0.0.0
sharding:
  configDB: config/172.16.40.145:20004,172.16.40.145:20005,172.16.40.145:20006
EOF

mongos -f /mongodb/20011/mongod.conf
```

```
# Add the shard replica sets to the cluster (run against a mongos)
mongo --port 20010 admin
use admin
db.runCommand({ addshard:"CN_sh/172.16.40.143:20001,172.16.40.143:20002,172.16.40.144:20003",name:"CN_sh"})
db.runCommand({ addshard:"US_sh/172.16.40.144:20001,172.16.40.144:20002,172.16.40.143:20003",name:"US_sh"})
```
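The `configDB` value in the mongos configs and the `addshard` arguments share one form: `<replSetName>/<host:port>,<host:port>,...`. The name before the slash has to match the members' `replSetName`. `seedList` below is a hypothetical helper that builds this string from one member list, so the name and hosts are defined in a single place.

```javascript
// Hypothetical helper: formats "<replSetName>/<host:port>,<host:port>,...",
// the form used by sharding.configDB and by addshard above.
function seedList(replSetName, hosts) {
  if (hosts.length === 0) {
    throw new Error("a seed list needs at least one host");
  }
  return `${replSetName}/${hosts.join(",")}`;
}

console.log(seedList("config", ["172.16.40.145:20004", "172.16.40.145:20005", "172.16.40.145:20006"]));
console.log(seedList("CN_sh", ["172.16.40.143:20001", "172.16.40.143:20002", "172.16.40.144:20003"]));
```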

```
# View the cluster status
mongos> sh.status()
--- Sharding Status ---
  sharding version: {
    "_id" : 1,
    "minCompatibleVersion" : 5,
    "currentVersion" : 6,
    "clusterId" : ObjectId("6315c1bd186b87fad5b2a3a2")
  }
  shards:
    { "_id" : "CN_sh", "host" : "CN_sh/172.16.40.143:20001,172.16.40.143:20002,172.16.40.144:20003", "state" : 1 }
    { "_id" : "US_sh", "host" : "US_sh/172.16.40.143:20003,172.16.40.144:20001,172.16.40.144:20002", "state" : 1 }
  active mongoses:
    "4.4.14" : 2
```

Both shard replica sets have been added. The next step is to define how data is distributed across them.

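```
# Tag the shards (assign each shard to a zone)
mongos> sh.addShardTag("CN_sh","ASIA")
mongos> sh.addShardTag("US_sh","AMERICA")

# Configure the sharding strategy.
# First enable sharding on the database, e.g. the crm database:
mongos> db.runCommand({enablesharding: "crm"})
# Then shard crm.orders on a compound key that starts with locationCode,
# so the zone ranges below can match on it:
mongos> sh.shardCollection("crm.orders", {locationCode: 1, order_id: 1})
```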

Sharding strategy (zone ranges):

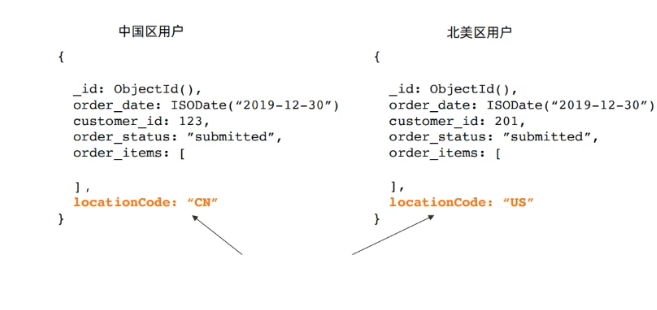

```
sh.addTagRange("crm.orders",                    // the orders collection in the crm database
    {"locationCode":"CN","order_id":MinKey},    // range on locationCode: documents matching CN
    {"locationCode":"CN","order_id":MaxKey},    // are written to the shard tagged ASIA
    "ASIA")

sh.addTagRange("crm.orders",
    {"locationCode":"US","order_id":MinKey},
    {"locationCode":"US","order_id":MaxKey},
    "AMERICA")

sh.addTagRange("crm.orders",
    {"locationCode":"CA","order_id":MinKey},
    {"locationCode":"CA","order_id":MaxKey},
    "AMERICA")
```
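Each range covers one `locationCode` value, from `order_id: MinKey` to `order_id: MaxKey`. The lower bound is inclusive and the upper bound exclusive. The simulation below shows how a document's shard key selects a zone, under these assumptions:

- The shard key is `{locationCode: 1, order_id: 1}`.
- JavaScript symbols stand in for `MinKey`/`MaxKey`.
- Values are compared only within the same type; MongoDB's full cross-type BSON ordering is not modelled.

This is an illustration, not how mongos is implemented.

```javascript
// Stand-ins for MinKey / MaxKey: they sort below / above every real value here.
const MIN_KEY = Symbol("MinKey");
const MAX_KEY = Symbol("MaxKey");

// Compare two scalar values (same-type strings or numbers only).
function cmpValue(a, b) {
  if (a === b) return 0;
  if (a === MIN_KEY || b === MAX_KEY) return -1;
  if (a === MAX_KEY || b === MIN_KEY) return 1;
  return a < b ? -1 : 1;
}

// Compare compound keys field by field, in shard-key order.
function cmpKey(fields, a, b) {
  for (const f of fields) {
    const c = cmpValue(a[f], b[f]);
    if (c !== 0) return c;
  }
  return 0;
}

const SHARD_KEY = ["locationCode", "order_id"];

// Mirrors the sh.addTagRange calls above: min inclusive, max exclusive.
const ZONE_RANGES = [
  { min: { locationCode: "CN", order_id: MIN_KEY }, max: { locationCode: "CN", order_id: MAX_KEY }, zone: "ASIA" },
  { min: { locationCode: "US", order_id: MIN_KEY }, max: { locationCode: "US", order_id: MAX_KEY }, zone: "AMERICA" },
  { min: { locationCode: "CA", order_id: MIN_KEY }, max: { locationCode: "CA", order_id: MAX_KEY }, zone: "AMERICA" },
];

// Zone whose range contains the document's shard key, or null if none does
// (data outside every zone range may be placed on any shard).
function zoneFor(doc) {
  const hit = ZONE_RANGES.find(
    (r) => cmpKey(SHARD_KEY, doc, r.min) >= 0 && cmpKey(SHARD_KEY, doc, r.max) < 0
  );
  return hit ? hit.zone : null;
}

console.log(zoneFor({ locationCode: "CN", order_id: 42 })); // ASIA
console.log(zoneFor({ locationCode: "CA", order_id: 7 }));  // AMERICA
console.log(zoneFor({ locationCode: "JP", order_id: 1 }));  // null
```

The country code decides the zone only because `locationCode` is the first shard-key field. With `order_id` first, one country's orders would be scattered across the key space.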

Inserting data: every inserted document must contain both shard-key fields, `order_id` and `locationCode`.
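Since MongoDB 4.4 the server accepts documents that lack shard-key fields and routes them as if those fields were null. Such orders would match none of the CN/US/CA ranges. A client-side guard can reject them before insert. `shardKeyOf` below is a hypothetical helper, not a MongoDB or driver API.

```javascript
// Hypothetical client-side guard (not a MongoDB API): returns the compound
// shard-key value and throws if either field is missing, so an order cannot
// silently fall outside every zone range.
const SHARD_KEY_FIELDS = ["locationCode", "order_id"];

function shardKeyOf(doc) {
  const missing = SHARD_KEY_FIELDS.filter((f) => !(f in doc));
  if (missing.length > 0) {
    throw new Error(`missing shard key field(s): ${missing.join(", ")}`);
  }
  return Object.fromEntries(SHARD_KEY_FIELDS.map((f) => [f, doc[f]]));
}

console.log(shardKeyOf({ order_id: 1, locationCode: "US", amount: 10 }));
```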

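```
# View the cluster status again
mongos> sh.status()
...
        balancing: true
        chunks:
            CN_sh 879
            US_sh 145
```

Chunks are now distributed across both shards.

Note: the shard key should also include `order_id`. The screenshot above omits it, so don't be misled: it is only an illustration.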

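### Custom range sharding with zones (manual)

Zones (the successor to tag-aware sharding) are available since MongoDB 3.4; `sh.addShardTag`/`sh.addTagRange` remain as aliases for the zone helpers.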

```
# Create the shard-key index on the collection to be sharded
mongo --port 20010 admin
use zonedb
db.vast.createIndex({order_id: 1})

// Enable sharding on the collection's database and set the collection's shard key
use admin
db.runCommand({enablesharding: "zonedb"})
sh.shardCollection("zonedb.vast", {order_id: 1})

sh.addShardTag("CN_sh", "shard00")
sh.addShardTag("US_sh", "shard01")

// Ranges are [min, max) and must not overlap:
// order_id < 500 -> shard00, order_id >= 500 -> shard01
sh.addTagRange(
    "zonedb.vast",
    {"order_id": MinKey},
    {"order_id": 500}, "shard00")

sh.addTagRange(
    "zonedb.vast",
    {"order_id": 500},
    {"order_id": MaxKey}, "shard01")

use zonedb
for (i = 1; i < 1000; i++) { db.vast.insert({"order_id": i, "name": "shenzheng", "age": 70, "date": new Date()}); }

db.vast.getShardDistribution()
```
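With non-overlapping ranges `[MinKey, 500)` for zone shard00 and `[500, MaxKey)` for zone shard01, the expected document split for `order_id` 1..999 can be counted directly. Zone shard00 is on CN_sh and zone shard01 on US_sh. `getShardDistribution()` should approach this split once the balancer has split and migrated chunks to honour the ranges. Its real output also reports data sizes and chunk counts.

```javascript
// Assumed zone ranges for zonedb.vast: [MinKey, 500) -> shard00, [500, MaxKey) -> shard01.
const BOUNDARY = 500;

function zoneOfOrderId(orderId) {
  return orderId < BOUNDARY ? "shard00" : "shard01";
}

// Same ids as the insert loop: 1..999.
const counts = { shard00: 0, shard01: 0 };
for (let i = 1; i < 1000; i++) {
  counts[zoneOfOrderId(i)] += 1;
}
console.log(counts); // { shard00: 499, shard01: 500 }
```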